How I use Emacs to keep a developer journal

Why keep a developer journal?

I won't expand on what a developer journal is, or on what the benefits of keeping a developer journal are; there are plenty of resources for that online (What is a Developer Diary, What is a developer journal?, You should keep a developer’s journal, Everything Starts with a Note-taking System). But let me tell you what made me start keeping a developer journal.

You see, I am one of those people who like to start each day from a blank page. This means that at the end of the work day I shut down my computer so that I can start anew the next morning. The problem with starting anew each day is that you don't always get to finish everything by the end of the day. In that case, starting anew the next day is, so to speak, nothing more than loading state into blank memory (I know; I am a nerd).

This is where keeping a developer journal shines for me. In my developer journal I log all the useful snippets I employ whilst working on a task, be it a SQL query, a URL that references some documentation, a milestone, a summary of a conversation with a colleague, a Eureka! moment, or a small step forward: anything that can help me, at some point in the future, restore the context I have right now. And the great part is that by future I don't necessarily mean tomorrow, or Monday; I mean months into the future.

Be aware, however, that I limit the time frame to months. As years go by, time takes its toll and it becomes harder and harder to recall the exact context of a specific task, regardless of how detailed the journal entries are.

Why Emacs?

For some time now I have been using org-agenda to track the time I spend on each of my tasks in the simplest way possible: I add each task to a file from which org-agenda reads its data, call org-clock-in on that task when I start working on it, and call org-clock-out when I stop working on it.

Naturally, I wanted to build from there. I also wanted to start with something simple that would let me do what I wanted, and improve the workflow afterwards. In other words, and as cliché as it may sound, I wanted my setup to be agile: start small and let it evolve in the right direction. And, to the best of my knowledge, Emacs is the only piece of software that provides this flexibility.

The setup

I do use org-roam, which would have fit the requirements for a developer journal. However, I refrained from using it for a few reasons:

- I wanted to keep everything in a single file because I liked how org-capture can file entries under a date tree.
- I would have polluted my Zettelkasten, because I may need to create multiple entries for a single task, and those entries would not have been linked to the rest of the knowledge graph.
- I wanted to keep my developer journal separate from the Zettelkasten.

So, once I had excluded org-roam from the list of candidates, I went back to basics: an org-capture template. But a simple capture template was not enough. During a work day I can switch between multiple tasks, and before switching from one task to another I log the latest state of affairs for the current task in my developer journal. So I needed a way to tell which task each journal entry refers to.

After digging a bit through the documentation, I found a simple solution to this problem — store a link to the current development task, and use that link in the capture template.

The org package already provides a function for this, org-store-link, so all I had to do was add a key binding for it (C-c l in my case):

(use-package org
  :defer t
  :bind (:map global-map
         ("C-c c" . org-capture)
         ("C-c a" . org-agenda)
         :map org-mode-map
         ("C-c l" . org-store-link)
         :map org-agenda-mode-map
         ("C-c l" . org-store-link)))

I did not know it at the time, but this solution turned out to fit really well into my workflow; more on this in the next section.

At this point my setup was ready: after storing a link to the task I was currently working on, I could capture whatever bits of information I considered relevant for the task at hand. However, there were some nuisances in how the capture environment presented itself, so after digging through the documentation again, I found out that I can provide a function that sets up the environment before the capture starts. So I created the following function to do just that, configuring the capture environment the way I like it:

- start olivetti-mode with a body width of 120 to avoid line wrapping in source code blocks,
- change the dictionary to US English and start flyspell-mode (I try to make as few spelling mistakes as I can), and
- last but not least, delete other windows to avoid clutter.

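(defun rp/setup-work-journal-capture ()
  "Prepare an environment for capturing work journal entries."
  ;; Set the width before enabling olivetti-mode so the margins use it.
  (setq-local olivetti-body-width 120)
  (olivetti-mode 1)
  ;; Switch to US English and check spelling on the fly.
  (ispell-change-dictionary "en_US")
  (flyspell-mode 1)
  ;; Keep only the capture window to avoid clutter.
  (delete-other-windows))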

With all the above in place, this is what my final capture template looks like:

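(with-eval-after-load 'org-capture
  ;; add-to-list expects the variable's symbol, hence the quote. Each
  ;; template is added on its own and appended, so "w" precedes "wj".
  (add-to-list 'org-capture-templates
               '("w" "Work related items") t)
  (add-to-list 'org-capture-templates
               '("wj" "Journal entry"
                 entry
                 (file+olp+datetree "~/org/gtd/work.org" "Journal")
                 "* %a \n%U\n\n%?"
                 :hook rp/setup-work-journal-capture)
               t))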
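To make the date tree concrete, the Python sketch below (an illustration only, not Emacs Lisp) computes the outline path under which a file+olp+datetree template files an entry. It assumes that "Journal" is a top-level heading and that Org uses its default datetree headings (a year, then "YYYY-MM Monthname", then "YYYY-MM-DD Dayname"); the function names are hypothetical, and month and day names assume an English (C) locale.

```python
from datetime import date


def datetree_path(day: date, parent: str = "Journal") -> list[str]:
    """Outline path under which a datetree capture files an entry (illustrative)."""
    return [
        parent,               # the outline path given after the file name
        f"{day:%Y}",          # year heading, e.g. "2024"
        f"{day:%Y-%m %B}",    # month heading, e.g. "2024-05 May"
        f"{day:%Y-%m-%d %A}", # day heading, e.g. "2024-05-17 Friday"
    ]


def render_outline(path: list[str], entry: str) -> str:
    """Render the path as nested Org headings, with the entry one level deeper."""
    lines = ["*" * (depth + 1) + " " + heading for depth, heading in enumerate(path)]
    lines.append("*" * (len(path) + 1) + " " + entry)
    return "\n".join(lines)
```

For example, render_outline(datetree_path(date(2024, 5, 17)), "Some task") yields a five-level outline whose last two lines are "**** 2024-05-17 Friday" and "***** Some task", which is why all entries for one day end up grouped under a single heading.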

The workflow

As I mentioned in the previous section, this setup proved to be a really good fit for my workflow and became a seamless extension of it, because I was already using org-agenda and org-clock to schedule my tasks and track the time spent on each of them.

While my previous workflow was: add the task to org-agenda, schedule it, and use org-clock-in and org-clock-out to track the time, the new workflow is as follows:

- Add the task to org-agenda and schedule it properly using a capture template.
- Call org-clock-in when starting to work on a task (bound to I in the *Org Agenda* buffer).
- When I want to add a new developer journal entry for that task, I:
  - switch to the *Org Agenda* buffer using C-x b (or M-x switch-to-buffer),
  - in the agenda buffer, call org-clock-goto by pressing C-c C-x C-j, then:
    - C-c l to store the link to the current item,
    - C-c c to start org-capture,
    - w j to select the developer journal capture template,
    - input all the important information, and
    - C-c C-c to finalize the capture.

- switch to the

Although it may seem complicated at first, I felt no resistance in internalizing this workflow because it became a natural extension of the previous one. And yes, there are a lot of keys to press. But for someone who types a lot (as all developers do), pressing all those keys takes very little time, while the benefit it brings is huge.

My developer journal

I write several entries per day in my developer journal, and after a year of keeping it I can say for sure that this habit has improved my life as a developer in several ways. Besides letting me quickly start each day from a blank page, it forces me to think beforehand instead of diving head-first into the implementation. As a result, my implementations have fewer kludges and are easier to modify and maintain. Furthermore, my developer journal helps the entire team, because I can extract from it information that goes into the project wiki. Last but not least, I feel that my writing skills have improved as a result of keeping a developer journal.


Handling automatic update of concurrency tokens in Entity Framework Core

Introduction

If your application uses Entity Framework Core and has business logic of medium or higher complexity, at some point you may want to bake into that business logic a mechanism for handling concurrency when persisting data to the database.

If your software uses SQL Server as the database management system, things are really simple. All you need to do is implement the following steps:

- Create a RowVersion property in your entities,
- Configure that property as a concurrency token in the entity configuration, and
- Run the migration scripts.

Once all of the above are done the concurrency management works out of the box.

In any other circumstances, however, things get complicated. This post is about one of those other circumstances, namely when the database management system is Oracle and the version property is an integer value.

Automatic update of concurrency tokens

The hint on how to approach such a use case lies buried in the documentation, behind a simple link containing the keyword for the actual solution: use an interceptor to automatically update the value of the concurrency token property when saving changes. And since that documentation is too obscure for my taste, below are the steps to achieve the same goal.

Prerequisites

Let's say we have an entity named Person in our code-base with the following structure:

public class Person
{
    public long Id { get; set; }
    public string FirstName { get; set; }
    public string LastName { get; set; }
    public int Version { get; set; }
}

where we want the property Version to act as a concurrency token which will be updated automatically each time the data of the entity is updated.

If we have more than one entity for which we want to implement the automatic update of concurrency tokens, we can extract an interface to define the concurrency token property:

public interface IConcurrencyAwareEntity
{
    int Version { get; set; }
}

After defining the interface, we use it to mark all desired entities as being concurrency aware:

public class Person : IConcurrencyAwareEntity
{
    public long Id { get; set; }
    public string FirstName { get; set; }
    public string LastName { get; set; }
    public int Version { get; set; }
}

Entity configuration

We need to tell Entity Framework Core to enable the concurrency checking mechanism for the Person entity by specifying that the Version property is a concurrency token in the entity configuration:

using Microsoft.EntityFrameworkCore;
using Microsoft.EntityFrameworkCore.Metadata.Builders;

internal class PersonEntityConfiguration
    : IEntityTypeConfiguration<Person>
{
    public void Configure(EntityTypeBuilder<Person> builder)
    {
        // ...
        builder.Property(p => p.Version)
            .HasColumnName("VERSION")
            .HasColumnType("NUMBER")
            .IsConcurrencyToken();
    }
}

Interceptor implementation

With the entity properly configured, it is time to implement the interceptor that will increment the value of the Version property automatically when the entity is saved into the database.

To do so, we need to create a class that derives from the SaveChangesInterceptor class. This class overrides the SavingChanges and SavingChangesAsync methods in order to:

- iterate through all added or modified entries in the change tracker of the database context,
- for each entry, check whether its entity implements the IConcurrencyAwareEntity interface, and
- if it does, set the Version property to 1 for new entities, or increment it for existing ones.

using System.Collections.Generic;
using System.Linq;
using System.Threading;
using System.Threading.Tasks;
using Microsoft.EntityFrameworkCore;
using Microsoft.EntityFrameworkCore.ChangeTracking;
using Microsoft.EntityFrameworkCore.Diagnostics;

internal class ConcurrencyEntitySaveChangesInterceptor
    : SaveChangesInterceptor
{
    public override InterceptionResult<int> SavingChanges(
        DbContextEventData eventData,
        InterceptionResult<int> result)
    {
        this.IncrementVersionOfConcurrencyAwareEntities(
            eventData.Context);
        return base.SavingChanges(eventData, result);
    }

    public override ValueTask<InterceptionResult<int>>
        SavingChangesAsync(
            DbContextEventData eventData,
            InterceptionResult<int> result,
            CancellationToken cancellationToken = default)
    {
        this.IncrementVersionOfConcurrencyAwareEntities(
            eventData.Context);
        return base.SavingChangesAsync(
            eventData,
            result,
            cancellationToken);
    }

    private void IncrementVersionOfConcurrencyAwareEntities(
        DbContext context)
    {
        foreach (var entry in GetEntriesToUpdate(context))
        {
            var entity = entry.Entity as IConcurrencyAwareEntity;
            if (entry.State is EntityState.Added)
            {
                // New rows start at version 1; EF Core will issue an INSERT.
                entity.Version = 1;
                continue;
            }
            // Use the current value in the WHERE clause of the UPDATE,
            // then increment the value that gets written.
            var version = entry.Property(nameof(entity.Version));
            version.OriginalValue = entity.Version;
            entity.Version++;
        }
    }

    private IEnumerable<EntityEntry> GetEntriesToUpdate(
        DbContext context) =>
        context.ChangeTracker.Entries()
            .Where(e => e.Entity is IConcurrencyAwareEntity)
            .Where(e => e.State == EntityState.Added ||
                        e.State == EntityState.Modified);
}

While the method GetEntriesToUpdate() from above is self-explanatory, the method IncrementVersionOfConcurrencyAwareEntities() requires a bit of commentary on what it does to each EntityEntry instance.

If the entity does not exist in the database yet (i.e., when the corresponding entry has the state EntityState.Added), we just need to set the Version property to 1, because when the entity is persisted, Entity Framework Core will issue an INSERT statement.

However, when an entity with concurrency handling enabled already exists in the database, Entity Framework Core persists it with an UPDATE statement whose WHERE clause checks the value of the concurrency token alongside the primary key of the entity. For the Person entity declared above, the UPDATE statement would look like this:

update persons
set first_name = 'John',
    last_name = 'Doe',
    version = 3
where id = 42
  and version = 2

The value 2 in the example above is the value that the Version property had when the entity was loaded from the database. This is the reason why the method IncrementVersionOfConcurrencyAwareEntities contains these two lines:

var version = entry.Property(nameof(entity.Version));
version.OriginalValue = entity.Version;

These lines tell Entity Framework Core to update only the row whose Version value equals the value the property had before it was incremented.
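The statement shape can be tried out with Python's built-in sqlite3 module. This is a minimal sketch that uses SQLite in place of Oracle and hand-written SQL in place of the statement Entity Framework Core generates; the table layout mirrors the example above, and the helper names are hypothetical.

```python
import sqlite3


def create_persons_table() -> sqlite3.Connection:
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE persons (id INTEGER PRIMARY KEY, first_name TEXT, "
        "last_name TEXT, version INTEGER)"
    )
    conn.execute("INSERT INTO persons VALUES (42, 'Jon', 'Doe', 2)")
    return conn


def update_person(conn, person_id, first_name, last_name, original_version) -> int:
    """Write the new version, but only where the row still has the original one."""
    cur = conn.execute(
        "UPDATE persons SET first_name = ?, last_name = ?, version = ? "
        "WHERE id = ? AND version = ?",
        (first_name, last_name, original_version + 1, person_id, original_version),
    )
    return cur.rowcount  # number of rows the UPDATE matched and changed
```

On a fresh table, update_person(conn, 42, "John", "Doe", 2) returns 1 and leaves the row at version 3; repeating the call with the now stale version 2 matches no row and returns 0.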

Add the interceptor to the DbContext

Now that we have an interceptor to automatically increment the value of the Version property, all we need to do is to add it to the database context. This is done quite easily:

- Register your interceptor class in the dependency injection framework.
- Inject the interceptor into your DbContext class:

private readonly ConcurrencyEntitySaveChangesInterceptor interceptor;

public PersonsDbContext(
    ConcurrencyEntitySaveChangesInterceptor interceptor)
{
    this.interceptor = interceptor;
}

- Add the interceptor to the context class in the OnConfiguring method:

protected override void OnConfiguring(
    DbContextOptionsBuilder optionsBuilder)
{
    base.OnConfiguring(optionsBuilder);
    optionsBuilder.AddInterceptors(this.interceptor);
}

Once the interceptor is registered, you should see the value of the Version property increase automatically each time an entity marked with the IConcurrencyAwareEntity interface is updated.
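To see that behavior without a database, here is a Python sketch that models what the interceptor does to each tracked entry. EntityState, Entry, and ConcurrencyAware are simplified, hypothetical stand-ins for EF Core's change tracker and the IConcurrencyAwareEntity interface, not real EF Core APIs.

```python
from dataclasses import dataclass, field
from enum import Enum, auto


class EntityState(Enum):
    UNCHANGED = auto()
    ADDED = auto()
    MODIFIED = auto()


class ConcurrencyAware:
    """Marker base class playing the role of IConcurrencyAwareEntity."""


@dataclass
class Person(ConcurrencyAware):
    id: int
    first_name: str
    version: int = 0


@dataclass
class Entry:
    """Simplified stand-in for a change-tracker entry (not an EF Core type)."""
    entity: object
    state: EntityState
    original_values: dict = field(default_factory=dict)


def increment_versions(entries: list[Entry]) -> None:
    for entry in entries:
        if not isinstance(entry.entity, ConcurrencyAware):
            continue  # only concurrency-aware entities are touched
        if entry.state is EntityState.ADDED:
            entry.entity.version = 1  # new rows start at version 1
        elif entry.state is EntityState.MODIFIED:
            # Remember the value for the WHERE clause, then bump it.
            entry.original_values["version"] = entry.entity.version
            entry.entity.version += 1
```

Unchanged entries are left alone, mirroring the State filter in GetEntriesToUpdate().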

Concurrency handling

At this point, concurrency handling kicks in out of the box thanks to the two aforementioned lines from the IncrementVersionOfConcurrencyAwareEntities method:

var version = entry.Property(nameof(entity.Version));
version.OriginalValue = entity.Version;

The lines above encapsulate the essence of the concurrency handling mechanism. They tell Entity Framework Core (in an admittedly contrived way) that we want to update the entity if and only if the row in the database has a concurrency token (the Version property in our case) equal to the original value of the property when the entity was loaded, i.e., the value of the Version property before incrementing it.

If no row in the database table satisfies the condition above, no rows are updated, and Entity Framework Core throws a DbUpdateConcurrencyException which, as its name suggests, signals that the row we are trying to update was changed in the meantime.
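The scenario can be played out end to end in a small Python sketch, again with sqlite3 standing in for the real database and a hypothetical ConcurrencyConflictError standing in for DbUpdateConcurrencyException: two users load the same row, the first save succeeds, and the second finds no matching row.

```python
import sqlite3


class ConcurrencyConflictError(Exception):
    """Hypothetical stand-in for EF Core's DbUpdateConcurrencyException."""


def make_db() -> sqlite3.Connection:
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE persons (id INTEGER PRIMARY KEY, first_name TEXT, version INTEGER)")
    conn.execute("INSERT INTO persons VALUES (42, 'John', 2)")
    return conn


def load(conn, person_id) -> dict:
    first_name, version = conn.execute(
        "SELECT first_name, version FROM persons WHERE id = ?", (person_id,)
    ).fetchone()
    return {"id": person_id, "first_name": first_name, "version": version}


def save(conn, person: dict) -> None:
    original = person["version"]
    cur = conn.execute(
        "UPDATE persons SET first_name = ?, version = ? WHERE id = ? AND version = ?",
        (person["first_name"], original + 1, person["id"], original),
    )
    if cur.rowcount == 0:
        # No row matched: it was changed (or deleted) since it was loaded.
        raise ConcurrencyConflictError(f"row {person['id']} was changed since it was loaded")
    person["version"] = original + 1
```

If alice and bob both call load(conn, 42), alice's save() moves the row to version 3, and bob's save() then raises ConcurrencyConflictError instead of silently overwriting her change.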

Conclusion

Entity Framework Core has a built-in mechanism for handling concurrency that works great out of the box when the application uses the default types for concurrency tokens and SQL Server as the database management system. This mechanism relies on comparing the values of the concurrency token for each database operation: if the value supplied by the application differs from the value in the database, this is considered a concurrency conflict.

But once you stray off the beaten path, things become more complicated, as this post shows while providing a guide on how to automatically update the value of concurrency tokens in non-default scenarios. Fortunately, the concurrency handling mechanism in Entity Framework Core is designed to accommodate a wide range of use cases, and with a slight adjustment (a custom interceptor that updates the value of concurrency tokens when saving entities to the database), we can ensure the conflict handling mechanism operates as originally intended.

Introducing the Specialized Workers pattern

Introduction

Some years ago, in my blog post about using enums in C#, I mentioned that I like using what I called at the time specialized builders to refactor the switch statement into a series of classes that implement a common interface, each specialized for a specific case of the switch statement.

For the past few years I have been showing my colleagues a slightly improved version of this approach (the topic of this post), and they all seem to agree that it is indeed a cleaner and more elegant approach which, unlike the infamous switch statement, abides by the Open/Closed principle.

As such, I am excited to introduce to the world the Specialized Workers pattern.

Specialized Workers Pattern

Description and usage

The Specialized Workers pattern aims to distribute the logic of a business operation that requires choosing which of one or more tasks to execute into a collection of specialized classes, while keeping the knowledge about each task in a single place.

Under these circumstances, each worker knows just enough about the data to tell whether it can accomplish the task, and how to accomplish it.

In my experience, the decision to delegate a certain subtask is usually made by the unit in charge of delegating. In an abstract representation, the code would look something like this:

if (SomeCondition())
{
    DoWorkForConditionAbove();
}

where the method that contains the snippet above delegates the processing to the DoWorkForConditionAbove() method.

However, when the decision whether to delegate to a specific unit or not depends on the data that is to be processed, taking that decision couples both the delegator, and the worker unit to the structure of the data.

It is normal for the worker to be coupled to the structure of the data, because it has to know that structure in order to process it; but the delegator, which now becomes a coordinator, doesn't need to know the structure of the data.

Consider the example of a unit that has to parse an HTML element and has to make the following decision:

- if the current node is a div, then ParseDivElement() should be called,
- if the current node is a table, then ParseTableElement() should be called, and
- if the current element is none of the above, it should just be ignored.
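Written the conventional way, that decision looks roughly like the Python sketch below, where Node and the parse functions are hypothetical stand-ins for a real HTML parser. Note that parse_element has to know the tag names, which is exactly the coupling described above.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Node:
    tag: str
    text: str = ""


def parse_div_element(node: Node) -> str:
    return f"div: {node.text}"


def parse_table_element(node: Node) -> str:
    return f"table: {node.text}"


def parse_element(node: Node) -> Optional[str]:
    # The coordinator inspects node.tag itself, so it is coupled to the
    # structure of the data just like the functions it delegates to.
    if node.tag == "div":
        return parse_div_element(node)
    if node.tag == "table":
        return parse_table_element(node)
    return None  # any other element is ignored
```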

To put it differently than at the beginning of this section, the purpose of the Specialized Workers pattern is to:

- delegate the work to a single place, and

- enclose in a single place the knowledge about being able to perform a specific task and the knowledge required to perform that task.

Implementation

Now that we established what Specialized Workers pattern is, let's see how it can be implemented.

It goes without saying that when implementing this pattern, the amount of work depends directly on how many workers the business case requires (i.e., how many switch cases there are), but broadly speaking, to implement the Specialized Workers pattern you'll need to:

- Define an interface for the worker exposing two methods, CanProcess() and Process():

public interface ISpecializedWorker
{
    bool CanProcess(Payload payload);
    Result Process(Payload payload);
}

- Add a class for each use-case:

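internal class MondayPayloadWorker : ISpecializedWorker
{
    public bool CanProcess(Payload payload)
    {
        // Comparison (==), not assignment: this worker only handles Mondays.
        return payload.DayOfWeek == DayOfWeek.Monday;
    }

    public Result Process(Payload payload)
    {
        // ProcessInternal holds the Monday-specific logic (not shown).
        return ProcessInternal(payload);
    }
}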

- Inject the workers into the calling class (the Employer), and delegate:

using System;
using System.Collections.Generic;
using System.Linq;

internal class Employer
{
    private readonly IEnumerable<ISpecializedWorker> _workers;

    public Employer(IEnumerable<ISpecializedWorker> workers)
    {
        _workers = workers;
    }

    public Result Process(Payload payload)
    {
        // SingleOrDefault throws if more than one worker claims the
        // payload, which enforces that the workers are mutually exclusive.
        var worker = _workers.SingleOrDefault(
            w => w.CanProcess(payload));

        // This is the equivalent of the default case
        // in the switch statement.
        if (worker == null)
        {
            throw new ArgumentException(
                "Cannot process the provided payload.");
        }

        return worker.Process(payload);
    }
}

And that's all there is to it. Now the Employer class is agnostic of how the payload is processed; it just delegates the processing to the worker that can handle it. If no worker able to process the payload is found, the Employer class can signal this by raising an exception, returning a default value, or using any other mechanism that suits the other patterns used in the code-base. More on this in section Adaptations. Furthermore, the Employer class doesn't have to know how many workers there are, so workers can be added or removed without any change to the Employer class, which means that the class is decoupled from the workers.

On the other hand, each worker class is, as the name of the pattern suggests, specialized to do one thing: work on the specific use case it knows all about, namely whether it can process it and, if so, how to process it.
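For comparison, here is the HTML example from the Description and usage section reworked with the pattern, as a Python sketch with hypothetical class names. The Employer never looks at tag names. Unlike the C# Employer, it returns None rather than throwing when no worker matches, because that example says unknown elements are simply ignored; the len(capable) > 1 check mirrors what SingleOrDefault() enforces.

```python
from dataclasses import dataclass
from typing import Optional, Protocol


@dataclass
class Node:
    tag: str
    text: str = ""


class ElementWorker(Protocol):
    def can_process(self, node: Node) -> bool: ...
    def process(self, node: Node) -> str: ...


class DivWorker:
    def can_process(self, node: Node) -> bool:
        return node.tag == "div"

    def process(self, node: Node) -> str:
        return f"div: {node.text}"


class TableWorker:
    def can_process(self, node: Node) -> bool:
        return node.tag == "table"

    def process(self, node: Node) -> str:
        return f"table: {node.text}"


class Employer:
    def __init__(self, workers: list[ElementWorker]):
        self._workers = workers

    def process(self, node: Node) -> Optional[str]:
        capable = [w for w in self._workers if w.can_process(node)]
        if len(capable) > 1:
            raise ValueError("More than one worker claims this node.")
        # No capable worker means the element is ignored, as the example requires.
        return capable[0].process(node) if capable else None
```

Supporting another element would mean adding one more worker class and passing it to the Employer; the Employer itself stays untouched.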

Discussion

How is it different from the Strategy pattern?

At this point you may be wondering how the Specialized Workers pattern is different from the Strategy pattern. After all, each worker implements and applies a different strategy in processing the data.

To put it simply, the Specialized Workers pattern is not different from the Strategy design pattern; it evolves from it, with the added behavior that the caller (the coordinator, i.e., the Employer class from above) doesn't have to know explicitly which worker to employ. The worker is selected based on its own knowledge of the payload (i.e., based on the workers' "expertise"), which, as mentioned before, makes the coordinator agnostic of the payload. As such, all the knowledge related to a specific kind of processing is kept in the same class where the processing happens.
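The difference is easiest to see side by side. In this Python sketch (all names hypothetical), run_strategy receives an object the caller has already picked, while run_specialized lets the workers select themselves through can_process().

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Payload:
    weekday: int  # 0 = Monday ... 6 = Sunday, as returned by date.weekday()


class MondayWorker:
    def can_process(self, payload: Payload) -> bool:
        return payload.weekday == 0

    def process(self, payload: Payload) -> str:
        return "Monday processing"


class WeekendWorker:
    def can_process(self, payload: Payload) -> bool:
        return payload.weekday >= 5

    def process(self, payload: Payload) -> str:
        return "weekend processing"


def run_strategy(strategy, payload: Payload) -> str:
    # Strategy: the caller has already decided which object to use.
    return strategy.process(payload)


def run_specialized(workers, payload: Payload) -> Optional[str]:
    # Specialized Workers: each worker's own can_process() decides.
    worker = next((w for w in workers if w.can_process(payload)), None)
    return worker.process(payload) if worker is not None else None
```

With plain Strategy, nothing stops a caller from applying WeekendWorker to a Monday payload, because the knowledge of which strategy fits lives outside the strategy; run_specialized keeps that knowledge inside each worker.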

There is, however, a difference in nomenclature: Strategy is a design pattern, whereas Specialized Workers is an implementation pattern. The difference between a design pattern and an implementation pattern deserves a dedicated post, but to put it shortly, an implementation pattern tells you how you should write your code, while a design pattern specifies how the application should be structured.

Why not use a Factory method?

Okay, you might say, then why not use a Factory method to directly build the worker that knows how to handle the specific use case?

Well, the answer to this question is actually one of the benefits that come with implementing the Specialized Workers pattern, namely that it keeps the specialized logic within the same class.

When applying the Factory method, the decision on which instance to build is separated from the actual processing that needs to take place. From the point of view of separation of concerns this is OK; however, one might argue that, in order to decide which worker to build, the factory has to either apply some business knowledge or be coupled to the data (by being aware of its structure). When applying the Specialized Workers pattern, all the business logic that is coupled to the data is in a single place, i.e., the class of the specialized worker.

Furthermore, when implementing the Specialized Workers pattern, you don't need to create instances of workers by hand as you do with the Factory method; the creation of the workers can be delegated to the Dependency Injection framework.

However, if the instantiation of the specialized workers depends on some parameters that the Dependency Injection framework cannot easily provide, you'll need to use a Factory method. In this case you can combine the two approaches: use the Factory method to build the specialized workers, and then pass them to the coordinator class that needs them. Keep in mind that combining the two patterns works well only if building each worker is an inexpensive operation; otherwise you'll end up spending resources to create instances that may never be used.
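A Python sketch of combining the two, assuming (purely for illustration) that each worker needs a runtime parameter, here a made-up tax rate per region. The factory builds one cheap worker per configured region, and the Employer only ever sees the finished list.

```python
from dataclasses import dataclass


@dataclass
class Payload:
    region: str
    amount: float


class RegionTaxWorker:
    """Hypothetical worker whose constructor needs a runtime value."""

    def __init__(self, region: str, rate: float):
        self.region = region
        self.rate = rate

    def can_process(self, payload: Payload) -> bool:
        return payload.region == self.region

    def process(self, payload: Payload) -> float:
        return round(payload.amount * self.rate, 2)


def build_workers(rates: dict[str, float]) -> list[RegionTaxWorker]:
    # Factory method: one inexpensive worker per configured region.
    return [RegionTaxWorker(region, rate) for region, rate in rates.items()]


class Employer:
    def __init__(self, workers):
        self._workers = workers

    def process(self, payload: Payload) -> float:
        worker = next((w for w in self._workers if w.can_process(payload)), None)
        if worker is None:
            raise ValueError("Cannot process the provided payload.")
        return worker.process(payload)
```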

Drawbacks

As we all know, there are no perfect solutions, especially in software development. This is also the case for the Specialized Workers pattern, and as such, it has a few drawbacks listed below.

No guarantee of the same parameters

The first drawback of the Specialized Workers pattern stems from the fact that nothing constrains the methods CanProcess() and Process() to be called in the order they are meant to be called. Furthermore, there is no guarantee whatsoever that these methods are called with the same parameter. The lack of constraints on the order of the calls and on the parameters means that the caller may ignore the result of CanProcess(), or not call it at all, and then invoke Process().

There are (at least) two ways to work around this misuse: combine the two methods into a single one, as presented in sub-section Using a single method, or simply guard against invalid arguments using Debug.Assert() or any of its equivalents:

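using System.Diagnostics;

public Result Process(Payload payload)
{
    // Debug.Assert is compiled only into Debug builds, so this catches
    // misuse during development at no cost in Release builds.
    Debug.Assert(CanProcess(payload));

    // Do the work
}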
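The closest Python equivalent is the assert statement. A minimal sketch with a hypothetical Payload type:

```python
from dataclasses import dataclass


@dataclass
class Payload:
    weekday: int  # 0 = Monday, as returned by datetime.date.weekday()


class MondayPayloadWorker:
    def can_process(self, payload: Payload) -> bool:
        return payload.weekday == 0

    def process(self, payload: Payload) -> str:
        # Like Debug.Assert in Release builds, Python's assert is removed
        # when running with -O, so it only guards during development.
        assert self.can_process(payload), "process() called with an unsupported payload"
        return "processed Monday payload"
```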

Sensitivity to collections

While using the Specialized Workers pattern, you should be cautious when calling CanProcess() on collections. Ideally, CanProcess() should make its decision without iterating over any collection of items. There are two intertwined reasons for that: performance and lazy loading.

If you have a heterogeneous collection, you can iterate through it in the delegator and call CanProcess() on each item in the collection. At the end, the delegator aggregates the results.

class Employer
{
    private readonly IEnumerable<ISpecializedWorker> _workers;

    public Employer(IEnumerable<ISpecializedWorker> workers)
    {
        _workers = workers;
    }

    public Result ProcessCollection(IEnumerable<Item> collection)
    {
        var partialResults = new List<Result>();
        foreach (var item in collection)
        {
            var itemResults = _workers
                .Where(w => w.CanProcess(item))
                .Select(w => w.Process(item));
            partialResults.AddRange(itemResults);
        }
        return partialResults.Aggregate(/*...*/);
    }
}

This ensures that the collection is iterated only once thus avoiding any odd results due to lazy evaluation.
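The same idea in a Python sketch, where the collection is a generator and can therefore be consumed only once, much like a lazily evaluated query (Item, the workers, and the use of sum() as the aggregation step are hypothetical). If each worker iterated the generator inside can_process(), the first one would exhaust it; walking it once in the delegator avoids that.

```python
from dataclasses import dataclass
from typing import Iterable, Iterator


@dataclass
class Item:
    kind: str
    value: int


class DoubleWorker:
    def can_process(self, item: Item) -> bool:
        return item.kind == "double"

    def process(self, item: Item) -> int:
        return item.value * 2


class NegateWorker:
    def can_process(self, item: Item) -> bool:
        return item.kind == "negate"

    def process(self, item: Item) -> int:
        return -item.value


class Employer:
    def __init__(self, workers):
        self._workers = workers

    def process_collection(self, collection: Iterable[Item]) -> int:
        partial_results = []
        for item in collection:  # the collection is walked exactly once
            partial_results.extend(
                w.process(item) for w in self._workers if w.can_process(item)
            )
        return sum(partial_results)  # aggregation step; sum() is just an example


def lazy_items() -> Iterator[Item]:
    yield Item("double", 5)
    yield Item("negate", 3)
    yield Item("unknown", 100)  # no worker claims this one, so it is skipped
```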

Adaptations

Despite its drawbacks, the Specialized Workers pattern is quite flexible in its implementation. As such, it can be adapted for some specific scenarios discussed below. Of course, it goes without saying that the list is not exhaustive.

Using a single method

For the cases when the processing is lightweight, you can combine the two methods into a single one that returns a tuple like this:

public (bool canProcess, Result result) Process(Payload payload)
{
    if (!CanProcess(payload))
    {
        return (false, default(Result));
    }

    Result result = ProcessInternal(payload);
    return (true, result);
}

private bool CanProcess(Payload payload)
{
    // take decision here
}

As mentioned in the sub-section No guarantee of the same parameters, combining the two methods into a single one guards against calling the CanProcess() and Process() methods in the opposite order or with different parameters.
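In Python the single-method variant maps naturally onto a returned tuple. A minimal sketch, with a hypothetical Payload and a hypothetical employ() helper that asks each worker in turn and stops at the first one that handles the payload:

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Payload:
    weekday: int  # 0 = Monday, as returned by datetime.date.weekday()


class MondayPayloadWorker:
    def process(self, payload: Payload) -> tuple[bool, Optional[str]]:
        # The capability check and the work happen in one call, on one argument.
        if not self._can_process(payload):
            return False, None
        return True, f"processed weekday {payload.weekday}"

    def _can_process(self, payload: Payload) -> bool:
        return payload.weekday == 0


def employ(workers, payload: Payload) -> str:
    for worker in workers:
        handled, result = worker.process(payload)
        if handled:
            return result
    raise ValueError("Cannot process the provided payload.")
```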

Non-exclusive workers

You can have multiple workers capable of processing the same payload; in this case, the caller (the delegator) is responsible for aggregating the results:

var results = _workers.Where(w => w.CanProcess(payload))
                      .Select(w => w.Process(payload))
                      .ToArray();
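A Python sketch of non-exclusive workers, using a made-up discount example in which more than one rule can apply to the same order; the delegator collects every capable worker's result and aggregates them (here, by summing).

```python
from dataclasses import dataclass


@dataclass
class Order:
    total: float
    is_first_order: bool


class LoyaltyDiscountWorker:
    def can_process(self, order: Order) -> bool:
        return order.total >= 100

    def process(self, order: Order) -> float:
        return 10.0


class WelcomeDiscountWorker:
    def can_process(self, order: Order) -> bool:
        return order.is_first_order

    def process(self, order: Order) -> float:
        return 5.0


def total_discount(workers, order: Order) -> float:
    # Every capable worker contributes; the delegator aggregates the results.
    results = [w.process(order) for w in workers if w.can_process(order)]
    return sum(results)
```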

Ending thoughts

As you can see from this rather lengthy post, the Specialized Workers pattern provides both a way to cluster business logic into specialized classes and a good degree of flexibility to adapt the implementation to different situations. Despite this flexibility, however, it is my subjective opinion that the original form of the pattern (the one shown in the Implementation section) is the most elegant and eloquent, which is why I use that version most of the time. But, especially as a software developer, I am aware that each person has their own preferences with regard to how something should be done or implemented. As such, I hope you find this pattern useful, and if so, feel free to apply it in whichever way suits you best.

Don't release to Production, release to QA

Automate your release workflow to such extent that the QA engineers from your team become the users of the application.

Introduction

It's Friday afternoon, the end of the sprint, a few hours before the weekend starts, and the QA engineers are performing the required tests on the last Sprint Backlog Item (SBI). The developer responsible for that item, confident that the SBI meets all acceptance criteria, is already in the weekend mood.

Suddenly, a notification pops up: there is an issue with the feature being tested. The developer jumps on it to see what the problem is and, after discussing it with the QA engineer, finds out that the issue is caused by some leftover data from the previous SBI.

Having identified the problem, the developer spends a few minutes crafting a SQL script that cleans up the data, and gives it to the QA engineer. The QA engineer runs the script on the QA database, starts testing the SBI from the beginning, and then confirms that the system is "back to normal".

Both sigh in relief while the SBI is marked as "Done" and the weekend starts. Bliss!

Getting to the root problem

Although the day and the sprint goal were saved, I would argue that applying the cleanup script just fixed an issue and left the root problem untouched. To get to the root problem, let's take a closer look at what happened.

The database issue stems from the fact that, instead of being kept as close as possible to production data (as best practices suggest), the QA database grew into an entity of its own because the team did not keep it tidy.

When testing an SBI involves changing some of the data in the database, those changes are rarely reverted as soon as the SBI leaves the QA environment. With each such change the two databases (production and QA) drift further apart, and the probability of having to apply a workaround script increases. The paradox, however, is that the cleanup script, although it solves the issue, is yet another change to the data, which widens the gap between the QA and production databases even further.

And there lies our problem: not within the workaround script itself, but within the practice of applying workarounds to patch the proverbial broken pipes instead of building actual deployment pipelines.

And this problem goes one level deeper: sure, we can fix the problem at hand by restoring the database from a production backup, but to solve the issue once and for all we need to change how we look at the QA environment.

But our root-cause analysis is not complete yet. We can't just say "let's never apply workarounds" because workarounds are some sort of necessary evil. Let's look at why that is, shall we?

Why and when do we apply workarounds in production?

In the Production environment, a workaround is applied only in critical situations, due to the high risk of breaking the running system by making ad-hoc changes to it.

Unlike in the QA environment, where a breakdown affects only a few users (namely the QA engineers), when the system halts in Production the cost of the downtime is much, much higher. An improper or forgotten WHERE condition in a delete script, which wipes out whole tables of data and renders the system unusable, will in the happiest case lead only to frustrated customers who can't use the thing they paid for.

As such, in every critical situation first and foremost comes the assessment: is a workaround really needed?

When the answer is yes (i.e., there is no other way of fixing the issue now), then usually there are some procedures to follow. Sticking with the database script example, the minimal procedure would be to:

- create the workaround script,

- have that script reviewed and approved by at least one additional person, and

- have the script executed on Production by someone with proper access rights.

OK, now we're settled: workarounds are necessary in critical situations, and are applied after assessment, review, and approval. Then, going back to our story, the following question arises:

Why do we apply workarounds in the QA environment?

The QA environment is isolated from the Production environment and, by definition, has far fewer users. Furthermore, those users have a lot of technical knowledge of how the system runs, and always have something else to do (like designing or writing test cases) while the system is being brought back to normal.

Seen from this point of view, there is almost never a critical situation that would require applying a workaround in the QA environment.

Sure, missing the sprint goal may seem like a critical situation because commitments are important. But on the other hand, and going back to our example — if we're applying a workaround in QA just to promote some feature towards Production, are we really sure that the feature is ready?

Now that the assessment of criticality is done, let's get back to our topic and ask:

What if we treated QA environment like Production?

Production and QA environments are different (very different, I might add); there's no doubt about that. What makes them different, from the point of view of our topic, is that when a feature is deployed to the Production environment, all the prerequisites are known and all preliminary steps have been executed.

On the other hand, when deploying to the QA environment we don't always have this knowledge, nor are all the preliminary steps always completed. Furthermore, deploying to QA may require more steps than deploying to Production, e.g. restoring the database from the latest Production backup, anonymizing data, etc.

But the larger number of unknowns can be offset by the larger number of deployments and by the fact that a failure in the QA environment is not critical. In other words, what we lack in knowledge when deploying to the QA environment can be compensated for by multiple deployment trials, each one getting closer to success.

And when it comes to doing repetitive tasks…

Automation is key

To reduce the differences between (successive) environments you only need to do one thing, although I must say up front that achieving it can be really hard: automate everything.

If a release workflow is properly (read: fully) automated, then the differences between environments are reduced mainly to:

- The group of people who have the access rights to run the workflow on a specific environment. With today's tools the difference is simplified further: it comes down to the group of people who are allowed to see or push the "Deploy" button.

- The number and order of "Deploy" buttons a person has to push for the deploy to succeed.

Although we strive to make our environments behave the same, they are still inherently different. As such, it goes without saying that not everyone may have the rights to deploy to Production and that, due to some constraints, some environments may require additional actions to deploy. Nonetheless, the objective remains the same: avoid manual intervention as much as possible.
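To make the idea concrete, here is a minimal Python sketch of a fully automated release workflow in which environments differ only in data: who may press "Deploy" and which extra steps run first. The step names, the Environment type, and the deployer names are hypothetical stand-ins for real tooling; an actual pipeline would execute these steps in a CI/CD system rather than just record them in a list.

```python
from dataclasses import dataclass

# Hypothetical step names shared by every environment.
COMMON_STEPS = ["build", "run_migrations", "deploy_application", "smoke_test"]


@dataclass(frozen=True)
class Environment:
    name: str
    # Who is allowed to press "Deploy" for this environment.
    allowed_deployers: frozenset
    # Environment-specific steps that run before the common ones.
    extra_steps: tuple = ()


def deploy(env: Environment, user: str) -> list:
    """Return the ordered list of steps executed for this environment."""
    if user not in env.allowed_deployers:
        raise PermissionError(f"{user} may not deploy to {env.name}")
    executed = []
    for step in (*env.extra_steps, *COMMON_STEPS):
        # A real pipeline would run the step here; this sketch only records it.
        executed.append(step)
    return executed


QA = Environment(
    name="QA",
    allowed_deployers=frozenset({"dev", "qa"}),
    extra_steps=("restore_production_backup", "anonymize_data"),
)
PRODUCTION = Environment(name="Production", allowed_deployers=frozenset({"release_manager"}))

print(deploy(QA, "qa"))
```

The design choice is that QA and Production go through the same code path: adding a QA-only step is a configuration change, not a new item on a manual checklist.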

The Snowball Effect

Once minimal manual intervention is achieved, its effects ripple through the process and start a snowball effect.

Efficiency

At first, you gain efficiency: there is no checklist to go through when deploying and no time spent on the tedious steps of deployment. The computers perform those steps as quickly as possible, always in the same order, without skipping any of them or making the errors humans usually make when performing tedious work.

The deployment starts with the click of a button or on a certain event, and while it runs, the people on the team are free to do whatever they want: have a cup of coffee, make small talk with a colleague, or mind more important business, like the overall quality of the product they're working on.

Speed

Furthermore, besides efficiency you can gain speed — just by delegating the deployment process to computers you gain time because computers do boring stuff a lot quicker than humans.

With efficiency and speed comes reduced integration friction. A common effect of reduced integration friction is an increase in integration frequency. High integration frequency coupled with workflow automation leads to an increase in the number of deployments that are made.

And this is where the magic unfolds.

Tight feedback loop

Once you automate the repetitive tasks, you free up the QA engineer's time, which allows him/her to spend more time with the system(s) under test. In other words, the time gained through workflow automation is invested into the actual Quality Assurance of the system under test.

And when the QA engineer invests more time into the testing process, he/she will be able to spot more issues. With enough repetitions, enabled by quick deployments, the QA engineer acquires skills that enable him/her to spot defects faster. The sooner a defect is spotted, the sooner it is reported and, subsequently, the sooner it gets fixed.

We have a name for this: it's called a feedback loop. The feedback loop is not introduced by automation; it has long been present in the project. But once workflow automation is introduced, it tightens the feedback loop, which means we, as developers, wait less to see the effects of the changes we introduce into the system. In our specific case, we wait less because workflow automation reduces the deployment time to the QA environment to a minimum.

Improved user experience

But wait, there's more! The time that the QA engineer gets to invest into growing his/her skills is spent using the system under test. With more time spent using the system under test, the QA engineer gets closer and closer to the mindset of the real users of the system. And while in this mindset, the QA engineer not only understands what the system does for the user but also understands what the user wants to do.

Of course, this understanding is bound by a certain maximum but nonetheless, the effect it has on the development process is enormous.

First and foremost, the quality of the system increases: when the QA engineers have a sound understanding of what the user wants to do, they will make sure that the system indeed caters to the needs of its users. This alone is a huge win for the users, but it also benefits the entire team: knowledge about the system gets disseminated within the whole team (including developers), and the Product Owner (PO) is no longer the bottleneck.

Furthermore, with more time spent in the mindset of a user, the QA engineer will start striving for an improved user experience because he/she, like the real users of the system, will want to do things faster.

As such, the QA engineer starts suggesting usability improvements to the system. These improvements are small (e.g., changing the order of the menu items, adding the ability to have custom shortcuts on the homepage, etc.), but they do improve the user's experience.

Sure, all of those changes must be discussed with the team and approved by the PO, but those that get approved bring the system closer to what the actual users want.

Allow the QA engineer to be a user of the system

The main role of a QA engineer is to ensure that the system under test satisfies the needs of its users. As such, the QA engineer needs to think like a user, to act like a user, and to be able to quickly shift from the mindset of the user to the mindset of the problem analyst required by the job description.

But if you take all the hassle of deployment, and of fiddling with making the system run properly in the testing environment, off the QA engineer's hands, you unlock more time for him/her to spend in the mindset of an actual user. And having a user of the system close by is a treasure trove for building the system in such a way that it accomplishes its purpose: catering to the needs of its users.

As a developer, it may feel strange to look at your colleague, the QA engineer, as a user of the system you're both working on. After all, you both know far too much about what's under the hood for either of you to be considered just a simple user of it.

But it is a change worth making. And, as the saying goes, to change the world you need to start by changing yourself. This change comes when you treat the QA environment as a production environment and make all the efforts needed to hold delivery to QA to the same rigor as delivery to production. In essence, it's nothing but the shift in mindset already mentioned in the title: don't release to Production, release to QA.

Keep the tools separate from the domain of your application

At my previous job we had an Architecture club where we held regular meetings to discuss issues related to the architectural decisions of various applications, whether developed within the company or elsewhere. One of the latest topics discussed within the club was whether or not to use MediatR (and implicitly the Mediator pattern) in a project based on a CQRS architecture.

If you're not familiar with MediatR, it's a library that relays messages to their destination in an easy-to-use and elegant manner, in two ways: it dispatches requests to their handlers and sends notifications to subscribers.

The Mediator pattern itself is defined as follows:

Define an object that encapsulates how a set of objects interact. Mediator promotes loose coupling by keeping objects from referring to each other explicitly, and it lets you vary their interaction independently.
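To illustrate the two roles described above (requests dispatched to exactly one handler, notifications broadcast to any number of subscribers), here is a minimal Python sketch of the Mediator pattern. It is not MediatR's API: the Mediator class, its method names, and the request and notification types are hypothetical, chosen only to mirror the ideas.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Any, Callable, DefaultDict, Dict, List, Type


class Mediator:
    """Minimal mediator: senders know messages, not the objects handling them."""

    def __init__(self) -> None:
        # Request/response: exactly one handler per request type.
        self._handlers: Dict[Type, Callable[[Any], Any]] = {}
        # Publish/subscribe: any number of subscribers per notification type.
        self._subscribers: DefaultDict[Type, List[Callable[[Any], None]]] = defaultdict(list)

    def register_handler(self, request_type: Type, handler: Callable[[Any], Any]) -> None:
        if request_type in self._handlers:
            raise ValueError(f"a handler for {request_type.__name__} already exists")
        self._handlers[request_type] = handler

    def send(self, request: Any) -> Any:
        handler = self._handlers.get(type(request))
        if handler is None:
            raise LookupError(f"no handler registered for {type(request).__name__}")
        return handler(request)

    def subscribe(self, notification_type: Type, subscriber: Callable[[Any], None]) -> None:
        self._subscribers[notification_type].append(subscriber)

    def publish(self, notification: Any) -> None:
        # Zero subscribers is fine: publishing is fire-and-forget.
        for subscriber in self._subscribers[type(notification)]:
            subscriber(notification)


# Hypothetical message types used only for the demo below.
@dataclass(frozen=True)
class GetAccountBalanceRequest:
    account_id: int


@dataclass(frozen=True)
class PaymentRegistered:
    account_id: int
    amount: float


mediator = Mediator()
balances = {42: 100.0}
mediator.register_handler(GetAccountBalanceRequest, lambda request: balances[request.account_id])

audit_log: List[PaymentRegistered] = []
mediator.subscribe(PaymentRegistered, audit_log.append)
mediator.publish(PaymentRegistered(account_id=42, amount=10.0))

print(mediator.send(GetAccountBalanceRequest(account_id=42)))  # 100.0
```

Note that whoever sends GetAccountBalanceRequest never references the balances dictionary or the handler; that indirection is exactly what the rest of this post argues should remain an implementation detail.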

I'm going to step back a bit and give some additional context regarding this meeting. I hadn't been able to attend the club's meetings since mid-February because they overlapped with a class I teach. One afternoon, my colleague George, the founder of the club, told me that the topic of the next meeting would be whether or not to use MediatR and, knowing that I use MediatR in my side project, that it would be nice for me to weigh in.

At first, I must confess, I was taken aback: for me there was never any doubt that MediatR is a great tool and should be used in a CQRS architecture. This is why I said I would really like to hear what the other members had to say, especially those opposing the use of MediatR.

As the discussion went on I concluded that the problem wasn't whether to use or not to use MediatR but rather how it was used. And it was used as the centerpiece of the big ball of mud.

The discussion started by referring back to a presentation at a local IT event, where the same question was put upfront: to use or not to use MediatR? Afterwards, the discussion switched focus to a project where the mediator pattern was mandated for every call pertaining to business logic and, beyond that, even for the calls to AutoMapper. In other words, the vast majority of what should have been simple method calls became calls to mediator.Send(new SomeRequest{...}).

In order to avoid being directly coupled to the MediatR library, the project hid the original IMediator interface behind a custom interface (let's call it ICustomMediator), thus ensuring low coupling to the original interface. The problem is that, although the initial intention was good, the abundance of calls to the custom mediator creates a dependency of the application modules upon the custom-defined interface. And this is wrong.

"Why is that wrong?" you may ask. After all, the Dependency Inversion principle explicitly states that modules should depend on abstractions (such as interfaces), and since in the aforementioned project classes depend on an ICustomMediator interface which doesn't change even when the original interface changes, it's all good, right?

Wrong. That project did not avoid coupling; it just changed the contract it couples to from an interface defined in a third-party library to an interface defined within the project itself. That's it: it is still tightly coupled to a single interface. Even worse, that interface has become the centerpiece of the whole application, the God service, which hides the target from the caller behind at least two (vertical) layers and tangles the operations of a business transaction into a big ball of mud. In doing so, it practically obliterates the boundaries between modules, turning them from highly cohesive pieces into lumps of code that "belong together".

Furthermore, and this is the worst part, the ICustomMediator has changed its role from a tool that relays commands to their respective handlers to part of the application domain, i.e. the mediator changed from an implementation detail to a first-class citizen of the application. This shift is subtle but dangerous, and not that easy to observe, because the change creeps in gradually, akin to the slowly rising water temperature in the boiling frog fable.

The shift happens because every class that needs to execute a business operation which is not available as a method to invoke will hold a reference to the mediator in order to call that operation. As per the Law of Demeter (only talk to your immediate friends), that makes the ICustomMediator a friend of all the classes involved in implementing business logic. And a friend of a domain object (entity, service etc.) is a domain object itself. Or at least it should be.

OK, you might say, then what's the right way to use the mediator here? I'm glad you asked. Allow me to explain myself by taking (again) a few steps back.

Ever since I discovered MediatR I've seen it as the great tool it is. I remember how, while pondering the examples from the MediatR documentation and how I could adapt them for my project, I started running some potential usage scenarios in my head. After a short time, some clustering patterns started to emerge from those scenarios. The patterns weren't that complicated: a handler that registers a payment should somehow belong together with a handler that queries the balance of an account, whereas the handler that deals with customer information should belong somewhere else.

These patterns are nothing more than a high degree of cohesion between the classes implementing MediatR's IRequestHandler interface, and to isolate them I organized each such cluster into a separate assembly.

Having done that, another pattern emerged among the handlers within an assembly: each of them handled an operation of a single service.

Obviously, the next logical step was to actually define the service interface listing all the operations performed by the handlers within that assembly. And since the interface needs an implementation, I created a class for each service which calls mediator.Send() with the proper parameters for the specific handler and returns the results. This is how it looks:

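```csharp
using System.Threading.Tasks;
using MediatR;

// IMediator.Send is asynchronous, so the service exposes Tasks.
// Assumes RegisterPaymentCommand implements IRequest and
// GetAccountBalanceRequest implements IRequest<GetAccountBalanceResponse>.
public interface IAccountingService
{
    Task RegisterPayment(RegisterPaymentCommand paymentDetails);

    Task<GetAccountBalanceResponse> GetAccountBalance(GetAccountBalanceRequest request);

    Task<GetAllPaymentsResponse> GetAllPayments();
}

class AccountingService : IAccountingService
{
    private readonly IMediator _mediator;

    public AccountingService(IMediator mediator)
    {
        _mediator = mediator;
    }

    public Task RegisterPayment(RegisterPaymentCommand paymentDetails)
    {
        // Relay the command to its handler.
        return _mediator.Send(paymentDetails);
    }

    public Task<GetAccountBalanceResponse> GetAccountBalance(GetAccountBalanceRequest request)
    {
        // Relay the request instance to its handler.
        return _mediator.Send(request);
    }

    public Task<GetAllPaymentsResponse> GetAllPayments()
    {
        // The empty request is created here, so callers never have to supply it.
        return _mediator.Send(new GetAllPaymentsRequest());
    }
}
```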

As a result, I do have more boilerplate code, but on the upside I have:

- A separation of the domain logic from the plumbing handled by MediatR. If I want to switch the interface implemented by each handler I can use search and replace with a regex and I'm done.

- A cleaner service interface. For the service above, the handler that returns all payments should look like this:

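```csharp
using System.Collections.Generic;
using MediatR;

public class GetAllPaymentsRequest : IRequest<GetAllPaymentsResponse>
{
}

public class GetAllPaymentsResponse
{
    // Payment is a domain class defined elsewhere.
    public IEnumerable<Payment> Payments { get; set; }
}

// RequestHandler<,> is MediatR's base class for synchronous handlers.
public class GetAllPaymentsRequestHandler : RequestHandler<GetAllPaymentsRequest, GetAllPaymentsResponse>
{
    protected override GetAllPaymentsResponse Handle(GetAllPaymentsRequest request)
    {
        // ... load the payments here; the empty list is only a placeholder.
        return new GetAllPaymentsResponse { Payments = new List<Payment>() };
    }
}
```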

In order to call this handler you must pass an empty instance of GetAllPaymentsRequest to the mediator, but such a restriction doesn't need to be imposed on the service interface. Thus, the consumer of IAccountingService calls GetAllPayments() without being forced to provide an empty instance which, from the consumer's point of view, is useless.

However, the greatest benefit of introducing this new service is that it is a domain service, which abstracts away the technical details without breaking the Law of Demeter. Any class holding a reference to an instance of IAccountingService works with something pertaining to the Accounting domain, so when it invokes a method of IAccountingService it is calling a friend.

This pattern also makes the code a lot more understandable. Imposing a service over handlers that are related to each other unifies them and makes their purpose clearer. It's easy to understand that I need to call subscriptionsService to get a subscription, but things become more cluttered when I call mediator.Send(new GetSubscriptionRequest{SubscriptionId = id}) because it raises a lot of questions. Who gives me that subscription in the end, and where does it reside? Is this a database call or a network call? And who the hell is this mediator dude?

Of course, the first two questions may arise when dealing with any interface, and they should always be on programmers' minds because the implementation may affect performance. But performance concerns aside, it's just easier to comprehend the relationships and interactions when all the details fit together. And in a class dealing with the Accounting domain, a call to the mediator just doesn't fit.

Back to the main point, there's the question: what if I need to make a request through a queue (RabbitMQ, for example)? Let's assume I have a class which needs to get some details using a call to the mediator but afterwards needs to write some data to a queue within the same business transaction. In such a case, I have to either:

- inject into my class both an instance that knows how to talk to the queue and an instance of the mediator, or

- have another mediator handler that does the write, and perform two separate calls to the mediator.

By doing this I'm polluting the application logic with entities like the mediator, the queue writer etc., entities that pertain to the application infrastructure, not the application domain. In other words, they are tools, not building blocks. And tools should be replaceable. But how do I replace them if references to them are scattered all over the code base? With maximum effort, as Deadpool says.

This is why you need to separate tools from the application domain. And this is how I achieved that separation: by hiding the implementation details (i.e. tooling) behind a service interface that brings meaning to the domain. This way, when you change the tools, the meaning (i.e. intention) stays the same.
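Here is a minimal Python sketch of that separation, in the spirit of the C# AccountingService shown earlier. It assumes a tiny stand-in mediator and an in-memory stand-in for a queue writer (both hypothetical; a real project would use MediatR and a RabbitMQ client). Callers of AccountingService only ever see accounting operations: the empty GetAllPaymentsRequest, the mediator, and the queue all stay behind the service.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, List, Protocol, Type


class Mediator:
    """Stand-in for the mediator tool (hypothetical, not MediatR's API)."""

    def __init__(self) -> None:
        self._handlers: Dict[Type, Callable[[Any], Any]] = {}

    def register(self, request_type: Type, handler: Callable[[Any], Any]) -> None:
        self._handlers[request_type] = handler

    def send(self, request: Any) -> Any:
        return self._handlers[type(request)](request)


class QueueWriter(Protocol):
    """Anything that can write a message to a queue (e.g. a RabbitMQ publisher)."""

    def write(self, message: dict) -> None: ...


class InMemoryQueue:
    """Hypothetical stand-in for a real queue client; it just collects messages."""

    def __init__(self) -> None:
        self.messages: List[dict] = []

    def write(self, message: dict) -> None:
        self.messages.append(message)


@dataclass(frozen=True)
class GetAccountBalanceRequest:
    account_id: int


@dataclass(frozen=True)
class GetAllPaymentsRequest:
    """Carries no data; it exists only so the mediator can pick a handler."""


class AccountingService:
    """Domain-facing service: callers see accounting operations, not tools."""

    def __init__(self, mediator: Mediator, queue: QueueWriter) -> None:
        self._mediator = mediator
        self._queue = queue

    def get_all_payments(self) -> List[dict]:
        # The empty request is an implementation detail hidden from callers.
        return self._mediator.send(GetAllPaymentsRequest())

    def register_payment(self, account_id: int, amount: float) -> None:
        # One business operation, two tools: a read through the mediator and a
        # write to the queue. Swapping either tool only changes this class.
        balance = self._mediator.send(GetAccountBalanceRequest(account_id))
        self._queue.write(
            {"account_id": account_id, "amount": amount, "new_balance": balance + amount}
        )


mediator = Mediator()
mediator.register(GetAccountBalanceRequest, lambda request: 100.0)
mediator.register(GetAllPaymentsRequest, lambda request: [])
queue = InMemoryQueue()

service = AccountingService(mediator, queue)
service.register_payment(account_id=7, amount=25.0)
print(queue.messages)  # [{'account_id': 7, 'amount': 25.0, 'new_balance': 125.0}]
```

If the queue is later replaced by, say, an HTTP call, only AccountingService changes; its callers keep calling register_payment.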

Acknowledgments

I would like to thank my colleagues for their reviews of this blog post. Also a big thank you goes to all the members of Centric Architecture Club for starting the discussion which led to this blog post.

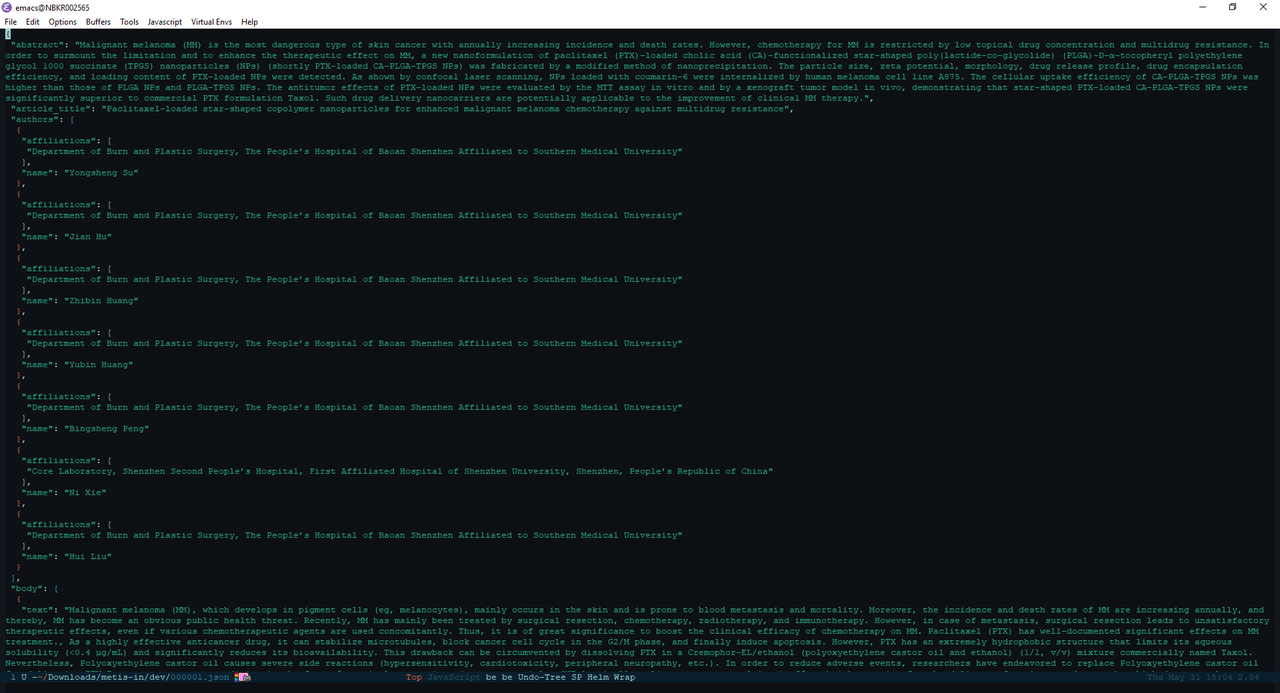

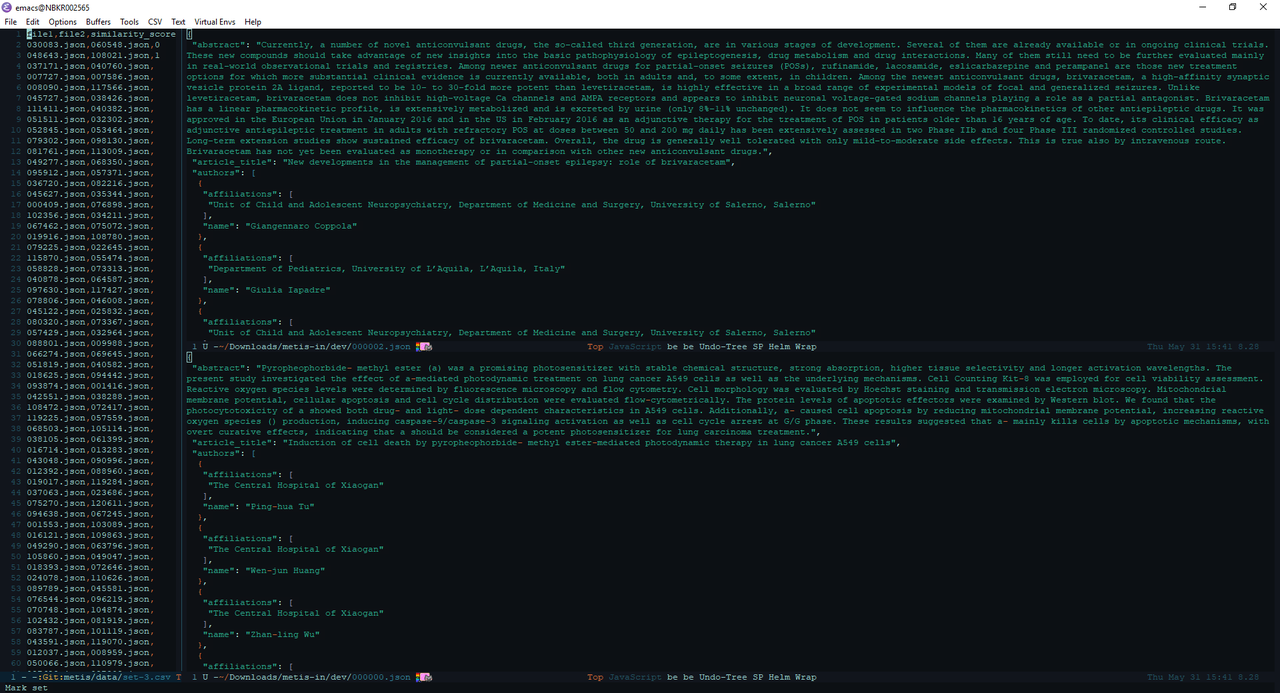

Building a Python IDE with Emacs and Docker

Prologue

I am a fan of Windows Subsystem for Linux. It brings the power of Linux command-line tools to Windows, which is something a developer cannot dislike, but that isn't the main reason I'm fond of it. I like it because it allows me to run Emacs (albeit in console mode) to its full potential.

As a side note, on my personal laptop I use Emacs on Ubuntu, whereas on my work laptop I use Emacs from Cygwin. And although Cygwin does a great job of providing the powerful Linux tools on Windows, some of them are really slow compared to the native ones. One such tool is git. I heavily use Magit for a lot of my projects, but working with it in Emacs on Cygwin is a real pain. Waiting for a simple operation to finish, knowing that the same operation completes instantly on Linux, is exhausting. So, to avoid that unpleasant experience, whenever I needed Magit I would use it from Emacs in Ubuntu Bash on Windows.

Furthermore, I use Ubuntu Bash on Windows to work on my Python projects simply because I can do everything from within Emacs there — from editing input files in csv-mode, to writing code using elpy with jedi and pushing the code to a GitHub repo using magit.

All was good until a Windows update messed up the console output on WSL, which rendered both my Python setup and Emacs unusable. And as if that wasn't bad enough, I was hit by this issue right before a very important deadline for one of my Python projects.

Faced with the fact that there was nothing I could do at that moment to fix the console output, and in desperate need of a solution, I asked myself:

Can't I create the same setup as in WSL using Docker?

The answer is Yes. If you want to see only the final Dockerfile, head directly to the TL;DR section. Otherwise, please read along. In any case — thanks for reading!

How

Since I had already been using Emacs as a Python IDE in Ubuntu Bash, replicating this setup in Docker would imply:

- Providing remote access to the container via ssh, and

- Installing the same packages for both the OS and Emacs.

I already knew more or less how to do the latter (or so I thought), so I obviously started with the former: ssh access to a Docker container.

Luckily, Docker already has an example of running an SSH service, so I started with the Dockerfile provided there. I copied the instructions into a local Dockerfile, built the image and ran the container. But when I tried to connect to the container, I ran into the first issue addressed in this post:

Issue #1: SSHD refuses connection

This one was easy — there's a typo in the example provided by Docker. I figured it out after inspecting the contents of sshd_config file.

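After a while I noticed that the line containing PermitRootLogin was commented out. Since sed's s command matches the pattern anywhere in a line, the original substitution turned that line into #PermitRootLogin yes, which is still a comment, so the permission never took effect.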

Since I was connecting as root, sshd refused the connection.

The fix for this is to include the # in the call to sed, as below:

```dockerfile
RUN sed -i 's/#PermitRootLogin prohibit-password/PermitRootLogin yes/' /etc/ssh/sshd_config
```
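To see why the command without the # has no visible effect, here is a small Python sketch that mimics sed's s command with re.sub (first match on the line only, as sed does without the g flag). It assumes the example's command was the fixed one above minus the leading #, and the sample line is an assumption about what the stock sshd_config contains; check your own file. Because the pattern can match anywhere in the line, the substitution does rewrite the text but keeps the leading #, so sshd still ignores the line.

```python
import re

# Assumed sample: the setting as it appears, commented out, in a stock sshd_config.
COMMENTED_LINE = "#PermitRootLogin prohibit-password"


def sed_substitute(pattern: str, replacement: str, line: str) -> str:
    """Mimic sed's s/pattern/replacement/ (first match only) for a literal pattern."""
    return re.sub(re.escape(pattern), replacement, line, count=1)


def is_active(line: str) -> bool:
    """sshd ignores lines that start with '#'."""
    return not line.lstrip().startswith("#")


original = sed_substitute("PermitRootLogin prohibit-password", "PermitRootLogin yes", COMMENTED_LINE)
fixed = sed_substitute("#PermitRootLogin prohibit-password", "PermitRootLogin yes", COMMENTED_LINE)

print(original)  # #PermitRootLogin yes  (text changed, but still a comment)
print(fixed)     # PermitRootLogin yes   (an active setting)
```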

Having made the change, I rebuilt the image and started the container. As the tutorial mentions, I ran docker port <container-name> 22 in the console. This command gave me the port to connect to, so I ran ssh root@localhost -p <port>.

Success.

Even though sshd was running and accepting connections, the fact that the root password was hard-coded in plain text really bothered me, so I made a small tweak to the Dockerfile:

```dockerfile
ARG password
RUN echo "root:${password}" | chpasswd
```

This declares a build argument named password, whose value is supplied when building the image, like this:

```shell
docker build -t <image-tag> \
    --build-arg password=<your-password-here> \
    .
```

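This way, the root password is no longer hard-coded in plain sight in the Dockerfile. Keep in mind, though, that build-argument values are still visible to anyone with access to the image (e.g. via docker history), which is acceptable for a local image but not for one you share. Now I was ready to move to the next step.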

Issue #2: Activating virtual environment inside container

The second item of my quest was to set up and activate a Python virtual environment. This environment would be used to install all the dependencies required for the project I'm working on.

Also, this environment will be used by Emacs and elpy to provide the features of an IDE.

At this point I asked myself: do I actually need a virtual environment? The Ubuntu Docker image comes with Python preinstalled, so why not install the dependencies system-wide? After all, Docker containers and images are somewhat disposable: I can always recreate the image and start a new container for another project.

I decided I needed a virtual environment because otherwise things would get messy, and I like well-organized stuff.

So I started looking up how to set up and activate a virtual environment inside a Docker container. And by looking up I mean googling it or, in my case, googling it with Bing.

I got lucky, since one of the first results was the article that led to my solution: Elegantly activating a virtualenv in a Dockerfile. It has a great explanation of what needs to be done and what's going on under the hood when activating a virtual environment.

The changes pertaining to my config are the following:

```dockerfile
ENV VIRTUAL_ENV=/opt/venv
RUN python3 -m virtualenv --python=/usr/bin/python3 $VIRTUAL_ENV
ENV PATH="$VIRTUAL_ENV/bin:$PATH"
RUN pip install --upgrade pip setuptools wheel && \
    pip install numpy tensorflow scikit-learn gensim matplotlib pyyaml matplotlib-venn && \
    pip install elpy jedi rope yapf importmagic flake8 autopep8 black
```

As described in the article linked above, activating a Python virtual environment in its essence is just setting some environment variables.

The solution above first defines where the virtual environment will be created and stores that path in the VIRTUAL_ENV variable. Next, it creates the environment at that path using python3 -m virtualenv $VIRTUAL_ENV. The --python=/usr/bin/python3 argument just makes sure that the Python interpreter used is indeed python3.

Activating the virtual environment means just prepending its bin directory to the PATH variable: ENV PATH="$VIRTUAL_ENV/bin:$PATH".

Afterwards, just install the required packages as usual.
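Since activation boils down to environment variables, it can be illustrated outside Docker. The Python sketch below uses a hypothetical activate helper and a fake virtual environment that contains only an executable bin/python3 stub. It applies the same two changes as the ENV lines above and shows that a PATH lookup for python3 then resolves to the virtual environment first. It assumes a POSIX system; the real activate script also does a few extra things, such as changing the shell prompt.

```python
import os
import shutil
import stat
import tempfile


def activate(env: dict, virtual_env: str) -> dict:
    """Return a copy of env with the two changes the Dockerfile makes:
    VIRTUAL_ENV is set and its bin directory is prepended to PATH."""
    activated = dict(env)
    activated["VIRTUAL_ENV"] = virtual_env
    bin_dir = os.path.join(virtual_env, "bin")
    activated["PATH"] = bin_dir + os.pathsep + env.get("PATH", "")
    return activated


# Build a throwaway "virtual environment" containing only an executable
# bin/python3 stub (hypothetical; a real venv contains much more).
venv_dir = tempfile.mkdtemp()
os.makedirs(os.path.join(venv_dir, "bin"))
fake_python = os.path.join(venv_dir, "bin", "python3")
with open(fake_python, "w") as stub:
    stub.write("#!/bin/sh\n")
os.chmod(fake_python, os.stat(fake_python).st_mode | stat.S_IXUSR)

env = activate({"PATH": "/usr/local/bin:/usr/bin:/bin"}, venv_dir)
resolved = shutil.which("python3", path=env["PATH"])
print(resolved == fake_python)  # True: the venv's python3 is found first

shutil.rmtree(venv_dir)
```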

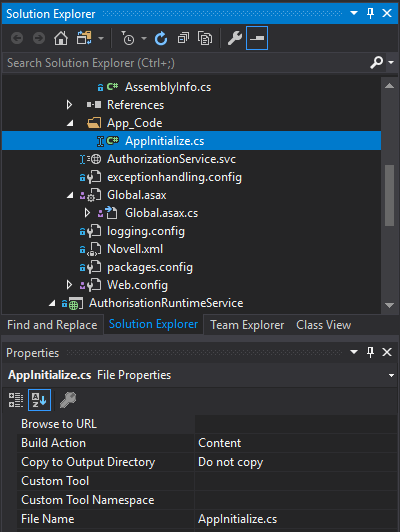

Issue #3: Emacs monolithic configuration file

After setting up and activating the virtual environment, I needed to configure Emacs for Python development so I could start working.

Luckily, I have my (semi-literate) Emacs config script in a GitHub repository, so all I needed to do was clone the repo locally and everything would work. Or so I thought.

I cloned the repository containing my config, which at that time was just a single file emacs-init.org bootstrapped by init.el, logged into the container and started Emacs.

After waiting for all the packages to install I was greeted by a plethora of errors and warnings: some packages were failing to install due to being incompatible with the Emacs version installed in the container, some weren't properly configured to run in console and so on and so forth.

Not willing to spend a lot of time on this (I had a deadline after all) I decided to take a shortcut: why don't I just split the configuration file such that I would be able to only activate packages related to Python development? After all, the sole purpose of this image is to have a setup where I can do some Python development the way I'm used to. Fortunately, this proved to be a good decision.

So I split my emacs-init.org file into four files: one file for tweaks and packages that I want to have everywhere, one file for org-mode related stuff, one file for Python development and lastly one file for tweaks and packages that I would like when I'm using Emacs GUI. The init.el file looked like this:

```emacs-lisp
(require 'package)
(package-initialize)

(org-babel-load-file (expand-file-name "~/.emacs.d/common-config.org"))
(org-babel-load-file (expand-file-name "~/.emacs.d/python-config.org"))
(org-babel-load-file (expand-file-name "~/.emacs.d/org-config.org"))
(org-babel-load-file (expand-file-name "~/.emacs.d/emacs-init.org"))
```

Now I could use sed on the init.el file to delete the lines loading the troublesome packages:

```shell
sed -i '/^.*emacs-init.*$/d' ./.emacs.d/init.el && \
sed -i '/^.*org-config.*$/d' ./.emacs.d/init.el
```
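Each sed call above uses the d command: every line matching the regular expression is deleted. As an illustration only (the Dockerfile keeps using sed), the Python sketch below applies the same two patterns to the init.el shown above through a hypothetical delete_matching_lines helper, leaving only the common and Python configuration files.

```python
import re

# The init.el from above, before trimming.
INIT_EL = """(require 'package)
(package-initialize)
(org-babel-load-file (expand-file-name "~/.emacs.d/common-config.org"))
(org-babel-load-file (expand-file-name "~/.emacs.d/python-config.org"))
(org-babel-load-file (expand-file-name "~/.emacs.d/org-config.org"))
(org-babel-load-file (expand-file-name "~/.emacs.d/emacs-init.org"))
"""


def delete_matching_lines(text: str, pattern: str) -> str:
    """Mimic sed '/pattern/d': drop every line in which the regex matches."""
    regex = re.compile(pattern)
    kept = [line for line in text.splitlines(keepends=True) if not regex.search(line)]
    return "".join(kept)


trimmed = INIT_EL
for pattern in (r"^.*emacs-init.*$", r"^.*org-config.*$"):
    trimmed = delete_matching_lines(trimmed, pattern)

print(trimmed)
```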

After starting a container from the new image, I started getting some odd errors about failing to verify package signatures. Again, googling the error message with Bing got me a quick fix: (setq package-check-signature nil). This fix is actually a security risk, but since it would be applied to an isolated environment I didn't bother looking for a better way.

However, another problem arose — how can I apply this fix without committing it to the GitHub repository?

Looking back at how I used sed to remove some lines from the init.el file, one of the first ideas that popped into my head was to replace an empty line in init.el with the quick fix. But after giving it some more thought, I decided to use a more general solution that involves a little bit of (over)engineering.

Since I'm interested in altering Emacs behavior before installing packages, it would be good to have a way to inject more Lisp code than a single line. Furthermore, in cases where such code spans multiple lines, I could just add it using Docker's ADD command instead of turning the Dockerfile into a maintenance nightmare of multiple sed calls.

Don't get me wrong: sed is great but I prefer to have large chunks of code in a separate file without the added complexity of them being intertwined with sed calls.

The solution to this problem is quite simple: before loading the configuration files, check whether a specific file exists in the .emacs.d directory (in my case config.el; not a descriptive name, I know). If the file exists, load it. Afterwards, load the known configuration files. And since we're doing this, why not do the same after loading the known configuration files?

Thus, the resulting init.el looks like this (I promise to fix those names sometime):

```emacs-lisp
(require 'package)
(package-initialize)

;; Optional hook: load config.el before the main configuration, if present.
(let ((file-name (expand-file-name "config.el" user-emacs-directory)))
  (when (file-exists-p file-name)
    (load-file file-name)))

(org-babel-load-file (expand-file-name "~/.emacs.d/common-config.org"))
(org-babel-load-file (expand-file-name "~/.emacs.d/python-config.org"))
(org-babel-load-file (expand-file-name "~/.emacs.d/org-config.org"))
(org-babel-load-file (expand-file-name "~/.emacs.d/emacs-init.org"))

;; Optional hook: load after-init.el after the main configuration, if present.
(let ((file-name (expand-file-name "after-init.el" user-emacs-directory)))
  (when (file-exists-p file-name)
    (load-file file-name)))
```

Now I just need to create the file and apply the fix:

```shell
echo "(setq package-check-signature nil)" >> ./.emacs.d/config.el
```

And since I can run custom code after loading the known configuration files, I can set the elpy-rpc-virtualenv-path variable in the same way:

```shell
echo "(setq elpy-rpc-virtualenv-path \"$VIRTUAL_ENV\")" >> ./.emacs.d/after-init.el
```

The Dockerfile code for this section is below:

```dockerfile
RUN cd /root/ && \
    git clone https://github.com/RePierre/.emacs.d.git .emacs.d && \
    echo "(setq package-check-signature nil)" >> ./.emacs.d/config.el && \
    sed -i '/^.*emacs-init.*$/d' ./.emacs.d/init.el && \
    sed -i 's/(shell . t)/(sh . t)/' ./.emacs.d/common-config.org && \
    sed -i '/^.*org-config.*$/d' ./.emacs.d/init.el && \
    sed -i 's/\:defer\ t//' ./.emacs.d/python-config.org && \
    echo "(setq elpy-rpc-virtualenv-path \"$VIRTUAL_ENV\")" >> ./.emacs.d/after-init.el
```

It also does one thing not mentioned previously: a sed call that removes lazy loading of packages (the :defer t keyword) from the python-config.org file.

Issue #4: Using SSH keys to connect to GitHub

Now that I have Emacs running on Ubuntu (albeit terminal-only), I can enjoy a smooth workflow without waiting ages for Magit or other applications that took forever to finish on Cygwin.

But there's an issue. I mount the repository I'm working on as a separate volume in the Docker container, which allows Magit to read all the required info (such as the user name) directly from the repository. However, I cannot push changes to GitHub because I'm not authorized.

To authorize the container to push to GitHub, I would need to generate an SSH key pair for authenticating on GitHub. But this can become, again, a maintenance chore: for each new container I would need to create the keys, add them to my GitHub account and remember to delete them when I'm finished with the container.

Instead of generating new keys each time, I decided to reuse the keys I had already added to my GitHub account; the image I'm building will not leave my computer, so there's no risk of someone getting hold of my keys.

Finding out how to do this was easy: there's a StackOverflow answer for that. In short, you declare two build arguments that hold the private and public keys, and write their values to the respective files. Of course, this also means creating the proper directory and setting proper permissions on the files. As a bonus, the answer shows a way to add GitHub to the known hosts. This is how it looks in the Dockerfile:

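ARG ssh_prv_key
ARG ssh_pub_key

# Authorize SSH Host
RUN mkdir -p /root/.ssh && \
    chmod 0700 /root/.ssh && \
    ssh-keyscan github.com > /root/.ssh/known_hosts

# Add the keys and set permissions
RUN echo "$ssh_prv_key" > /root/.ssh/id_rsa && \
    echo "$ssh_pub_key" > /root/.ssh/id_rsa.pub && \
    chmod 600 /root/.ssh/id_rsa && \
    chmod 600 /root/.ssh/id_rsa.pub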

To provide the values for the keys, use the --build-arg parameter when building the image, like this:

docker build -t <image-tag> \
    --build-arg ssh_prv_key="$(cat ~/.ssh/id_rsa)" \
    --build-arg ssh_pub_key="$(cat ~/.ssh/id_rsa.pub)" \
    .

Issue #5: Install Emacs packages once and done

After another build of the Docker image, I started a container from it, logged into the container via SSH, started Emacs and noticed yet another issue.

The problem was that at each start of the container I had to wait for Emacs to download and install all the packages from the configuration files, which, as you can guess, may take a while.

Since looking up the answer on the Web did not return any meaningful results, I kept refining my question until I came up with the answer myself. After several failed attempts, as I was typing "how to load Emacs packages in background" into the search bar, I remembered reading somewhere that Emacs can be used in a client-server setup where the server runs in the background.

This is a feature of Emacs called daemon mode. I had never used it myself, but on a whim I decided to try it just to see what would happen.

So I changed my Dockerfile to start Emacs as a daemon:

RUN emacs --daemon

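And to my great surprise, when rebuilding the image I saw the output of Emacs packages being downloaded and installed. Because the daemon loads the init file while the image is being built, the packages are installed into the image itself, so new containers no longer have to download them at startup.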

Issue #6: Terminal colors

Confident that everything should work now (it's the same setup I had on WSL), I started a new container, mounted the GitHub repo into it as a volume and got cracking.

Everything went swell until I decided to commit the changes and invoked magit-status. Then I got a real eyesore: the colors of the text in the status buffer were making it really hard to understand what changed and where.

At this point I just rage-quit and started looking for an answer. Fortunately, the right StackOverflow answer popped up quickly, and I applied the fix, which simply sets the TERM environment variable so that programs running in the terminal know it supports 256 colors:

ENV TERM=xterm-256color

Only after this was I able to fully benefit from having the Python IDE I'm used to on a native platform.

TL;DR

The full Dockerfile described in this post is below:

FROM ubuntu:18.04

RUN apt-get update && \
    apt-get install -y --no-install-recommends openssh-server tmux \
        emacs emacs-goodies.el curl git \
        python3 python3-pip python3-virtualenv python3-dev build-essential

ARG password

RUN mkdir /var/run/sshd
RUN echo "root:${password}" | chpasswd
RUN sed -i 's/#PermitRootLogin prohibit-password/PermitRootLogin yes/' /etc/ssh/sshd_config

# SSH login fix. Otherwise user is kicked off after login
RUN sed 's@session\s*required\s*pam_loginuid.so@session optional pam_loginuid.so@g' -i /etc/pam.d/sshd

ENV NOTVISIBLE "in users profile"
RUN echo "export VISIBLE=now" >> /etc/profile

# From https://pythonspeed.com/articles/activate-virtualenv-dockerfile/
ENV VIRTUAL_ENV=/opt/venv
RUN python3 -m virtualenv --python=/usr/bin/python3 $VIRTUAL_ENV
ENV PATH="$VIRTUAL_ENV/bin:$PATH"

RUN pip install --upgrade pip setuptools wheel && \
    pip install numpy tensorflow scikit-learn gensim matplotlib pyyaml matplotlib-venn && \
    pip install elpy jedi rope yapf importmagic flake8 autopep8 black

RUN cd /root/ && \
    git clone https://github.com/RePierre/.emacs.d.git .emacs.d && \
    echo "(setq package-check-signature nil)" >> ./.emacs.d/config.el && \
    sed -i '/^.*emacs-init.*$/d' ./.emacs.d/init.el && \
    sed -i 's/(shell . t)/(sh . t)/' ./.emacs.d/common-config.org && \
    sed -i '/^.*org-config.*$/d' ./.emacs.d/init.el && \
    sed -i 's/\:defer\ t//' ./.emacs.d/python-config.org && \
    echo "(setq elpy-rpc-virtualenv-path \"$VIRTUAL_ENV\")" >> ./.emacs.d/after-init.el

# From https://stackoverflow.com/a/42125241/844006
ARG ssh_prv_key
ARG ssh_pub_key

# Authorize SSH Host
RUN mkdir -p /root/.ssh && \
    chmod 0700 /root/.ssh && \
    ssh-keyscan github.com > /root/.ssh/known_hosts

# Add the keys and set permissions
RUN echo "$ssh_prv_key" > /root/.ssh/id_rsa && \
    echo "$ssh_pub_key" > /root/.ssh/id_rsa.pub && \
    chmod 600 /root/.ssh/id_rsa && \
    chmod 600 /root/.ssh/id_rsa.pub

RUN emacs --daemon

# Set terminal colors https://stackoverflow.com/a/64585/844006
ENV TERM=xterm-256color

EXPOSE 22
CMD ["/usr/sbin/sshd", "-D"]

To build the image, use this command:

docker build -t <image-tag> \
    --build-arg ssh_prv_key="$(cat ~/.ssh/id_rsa)" \
    --build-arg ssh_pub_key="$(cat ~/.ssh/id_rsa.pub)" \
    --build-arg password=<your-password-here> \
    .

Epilogue

Looking back at this quest of mine, all I can say is that it was, overall, a fun experience.

Sure, it also has some additional benefits that matter in my day-to-day life as a developer: I gained a bit more experience in building Docker images and learned a great deal along the way. It is also worth noting that this setup helped me a lot in meeting the deadline, which by itself shows how much of an improvement it is (even taking into consideration the time I spent making it work).

But the bottom line is that there was a great deal of fun involved, which luckily resulted in a new tool in my shed: while working on this post, I used this setup as the default for all new Python experiments, and I will probably use it for future projects as well.


Acknowledgments

I would like to thank my colleague Ionela Bărbuță for proofreading this post and for the tips & tricks she gave me in order to improve my writing.

Declutter the way of working

Like many other people, I strive to be as productive as I can. For me, being productive means two things:

- Getting the most out of my tools, and

- Spending less time on useless things.

Getting the most out of my tools is the reason I continuously invest in learning Emacs (which has proven to have a great return on investment) and Visual Studio shortcuts. For the same reason, I was as happy as a kid with a chocolate bar when Visual Studio introduced the Ctrl+Q shortcut for the Quick Launch menu, which allowed me to launch various utilities from Visual Studio without learning rarely used shortcuts and without navigating through menu items looking for the one I had in mind.

Spending less time on useless things means having a good workflow, and I was not happy with mine. My workflow was quite simple: after getting to work, I would start my workstation, open Outlook and whatever instant messaging applications I needed, open Visual Studio, get the latest sources from TFS, open the required solutions and start coding. In between any of those steps I would make myself a cup of tea, which I would sip occasionally while working. All those steps took some time before I could actually read, write or debug any code, and although over time I got hold of more powerful machines that loaded things faster, I still didn't like the feeling of having to wait before I could start working.

I started thinking about how to avoid waiting and turned to the simplest solution for my problem: for every application that I needed to start at the beginning of the day, I put a shortcut in the Windows startup folder. This way, after getting to work I only needed to boot my computer, log in and go make tea while the applications loaded automatically. I couldn't load all the required applications that way (most of the time I needed to run Visual Studio with elevated permissions, which halted the loading of the other applications due to the UAC settings), but I was happy to have all the other applications open automatically while I made my tea, and to open Visual Studio manually.

Meanwhile, I swapped the workstation for a laptop and got the opportunity to work on a data science project at work, alongside the main project I'm assigned to. A laptop brings a great deal of flexibility, and having a high-performance laptop with great battery life makes me want to use it for all my projects.

And that's when things started to get complicated workflow-wise. The two projects I work on at my job have different technology stacks (C# with .NET Core on the main project and Python with Azure ML on the data science one), which means different workflows, even though I still use the same applications for communication and other secondary tasks. In my spare time, early in the morning or late at night, I work on personal projects, and that's when I am most infuriated by the plethora of applications that start automatically but are totally useless at that moment and do nothing but consume resources.

At some point I realized that the time I used to spend opening the applications I needed is now wasted on closing the applications that start automatically but are of no use to me. Then I remembered a story I had read about a Russian DevOps engineer who would automate any task that took more than 1.5 minutes of his time (the English version and implementations of the scripts are available on GitHub). That story got me thinking:

Can I change which applications are loaded automatically based on the project I'm working on?

Unfortunately, the answer is no, because my laptop can't know which project I'll be working on next. But my schedule might give a hint: on every work day except Thursday, from 9:00 to 18:00, I work on the main project at my job; on Thursdays, from 9:00 to 18:00, I work on a secondary project; and outside business hours I work on my other projects.
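The schedule reduces to a decision over two inputs: the day of the week and the hour. As a minimal sketch (in bash, not the scripts I actually use), the decision could look like the function below. The name project_for is hypothetical, and the sketch assumes that Monday to Friday are work days and that 9:00 counts as business hours while 18:00 no longer does.

```shell
#!/usr/bin/env bash
# Hypothetical helper: prints which project the schedule points to.
# Arguments: ISO weekday (1 = Monday ... 7 = Sunday, as printed by `date +%u`)
#            and hour of day (0-23, as printed by `date +%H`).
# Assumptions: Monday-Friday are work days; business hours are [9:00, 18:00).
project_for() {
  local day=$1
  local hour=$((10#$2))   # force base 10 so "08" or "09" is not parsed as octal

  if (( day >= 1 && day <= 5 && hour >= 9 && hour < 18 )); then
    if (( day == 4 )); then
      echo "secondary"    # Thursday, business hours
    else
      echo "main"         # other work days, business hours
    fi
  else
    echo "personal"       # early mornings, evenings and weekends
  fi
}

# Decide based on the current day and hour
project_for "$(date +%u)" "$(date +%H)"
```

Keeping the decision in one function that takes the day and hour as arguments makes it easy to check against the schedule; a startup script could then branch on the printed value to choose which applications to launch.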

OK, so how do I use that information? Up until now I placed shortcuts in the Windows startup folder, but that doesn't do it for me anymore: there's no way to specify when a shortcut from the startup folder should start its application, so it starts every time.

So I had to look elsewhere for a solution. The next place I looked was Windows Task Scheduler, which provides more options for starting a task; unfortunately, its triggers are either too simple to encode the ranges from my schedule or too complicated to bother with.

To keep this decision simple, I turned to PowerShell. I created two scripts, each testing the following conditions:

- Is the current time between 9:00 and 18:00, and is the current day a work day other than Thursday? If yes, I'm at my job, working on the main project, and thus:

- The first script will:

- navigate to the directory mapped to TFS project,

- get latest version and